not no

a blog about information science, culture, and new media.

Return to Red Rocks

Rainbow Wall (Original Route)

Red Rocks is probably the most popular multipitch trad area in the US. It’s not the best one (obviously, that honor goes to Yosemite Valley), but the proximity to the urban conveniences of Las Vegas, the generous bolting, the positive gym-like flakes of patina, and the stable weather all combine to make it the most accessible long multipitch destination in the country. Not that I’m complaining about the crowds (well, maybe a little bit).

One of the coolest things about Red Rocks is that it offers a nice “ladder” of progressively harder and bigger routes, starting way down at the easiest end of the grade scale. Climbers return year after year, cutting their teeth on progressively gnarlier objectives. Climb Crimson Chrysalis (5.8) one year, come back the next for Epinephrine (5.9). Dream about climbing Inti Watana (5.10); tick that one off and start looking at the topo for Levitation 29 (5.11).

Looming over it all (literally) is the Original Route on Rainbow Wall (5.12), visible from almost any point on the Scenic Loop drive. There are more difficult multipitch routes in the canyons that seem very good (Jet Stream 5.13a, in particular), but the Original Route on Rainbow Wall is arguably the second-hardest classic multipitch sandstone route in the country (first place goes, of course, to Moonlight Buttress in Zion National Park). The OR also has the somewhat niche honor of being perhaps the easiest route that Alex Honnold gained some notoriety from free soloing back in the day. Doing all of the “famous Honnold solos” with a rope wouldn’t be a bad lifetime goal for a mere mortal trad climber: Original Route on Rainbow Wall, Moonlight Buttress, Sendero Luminoso, Astroman and Rostrum (in a day), University Wall, Freerider.

I originally planned a trip to try Rainbow Wall in spring of 2023, but I broke my ankle that winter, forcing me to cancel my plans. 15 months later, my ankle was (mostly) in serviceable shape and the opportunity presented itself to try the route in May.

I was nervous going in; I hadn’t climbed multipitch trad since the previous summer. The last time Ceri and I climbed together we nearly onsighted Freeway (5.11c) in Squamish. I was absolutely thrashed at the top of the wall, struggling to maintain control on mellow 5.10 terrain. The idea of climbing a longer, harder route guarded by a steep approach, after a period of basically no trad climbing practice and certainly zero cardio training, seemed laughable. Freeway starts off hard and gradually tapers off; meanwhile Rainbow Wall’s two 5.12a crux pitches come back to back near the top of the climb. But rumors of the route whispered “the cruxes are brief and well protected… face climbing strength is more important than crack climbing strength… anyone clipping bolts at the Gallery should go clip bolts on Rainbow Wall instead.” So I texted Ceri, bought a plane ticket, and started doing rope laps at the gym once a week.

The week before the trip I had done maybe one or two cardio workouts and still couldn’t fathom the idea of climbing 5.12 after a handful of 5.11 pitches in the gym. But I managed to flash Brave New World (5.12a) and Gravity’s Rainbow (5.12c) at the Gunks in a day; my trad leading head and fitness were starting to come back.

Our first objective upon landing in Vegas was Nightcrawler (5.10+), which conveniently lies just across the canyon from Rainbow Wall. Our idea was to familiarize ourselves with the approach: at a bit over 2 miles and 2000’ of elevation gain, we wanted to do it as quickly and efficiently as possible on the morning of our Rainbow Wall attempt. Some parties hike in camping gear and bivy at the base of the route. This strategy was out of the question, since our plan was to do the route in a push. We made several wrong turns on our way into Juniper Canyon, but took our time and eventually found a reliable and easy trail. Nightcrawler went smoothly, with Ceri linking the first two pitches into a mega rope stretcher before I linked the next two to the top. Although we sent fairly easily, the sandstone felt unfamiliar and we both felt a bit shaky on the rock. The idea of having to climb over twice as many similarly graded pitches on Rainbow Wall before busting out some 5.12 remained intimidating.

After rappelling Nightcrawler we made the game-time decision to jaunt over to Rainbow Wall and stash our climbing gear at the base. This removed some purity from our planned in-a-day ascent, but given that we were already deep in Juniper Canyon it almost seemed more contrived not to. The approach slabs proved incredibly punishing on the quads, although the “death slab” portion proved to be a lot more mellow than we anticipated. We deposited our planned rack, rope, harnesses, helmets, and climbing shoes discreetly under a boulder and hobbled out of the canyon.

36 hours later, we were on our way. We left the Pine Creek parking lot at 6:25AM with only daypacks filled with water and food, posing as simple hikers to the handful of parties eagerly racking up by their cars. Thanks to our rehearsal and our light packs the approach went smoothly, and we made it to the base of the wall in about 1:40. A couple of other parties showed up shortly after we arrived, and we began the multipitch ritual of trying to size each other up to see if anyone would be a liability on the wall.

Ceri’s block was up first; the plan was for her to lead all the way to the end of the main corner system, dispatching several pitches between 5.10 and 5.11, while I would take the crux pitches in the second half of the route. Shade had crept onto the first pitch and the party behind us began to anxiously arrange their gear, waiting for us to start. There were no more excuses; it was go time.

The climbing on the first few pitches proved to be mostly beautiful face climbing on my favorite Red Rock rock - solid chocolatey varnish, and big open handed holds. The cruxes proved to be mostly brief and well protected, and we quickly started to put some distance between us and the parties below. After breezing through the initial 6-pitch corner system Ceri simuled confidently through the scrambly section before it was my turn to lead. Some filler climbing deposited us at the base of the crux pitches of the route: two back to back 5.12 corner pitches in the “red dihedral.” I had been afraid of sustained, slippery finger crack laybacking, and was pleasantly surprised to find (again) short, well protected crux sections, with plenty of time to feel out all the crux holds before committing to just one or two hard moves and gaining easier terrain.

7 hours later, we were on top of the route. I had onsighted or flashed every pitch, and Ceri took only a couple of falls. On our way down, we discussed whether the route was soft, or whether we had truly grown as climbers. The answer is probably a bit of both; the route, true to the rumors, features just so many bolts and a lot of face climbing. The longest stretch between comfortable stances is probably the 25 foot steep section on the second Red Dihedral pitch - most of the climbing on the route is really in the 5.10 or easier range. The difficult pitches are not necessarily mis-graded; the pulls are hard, but the moves are intuitive and use fairly ergonomic body positions.

Regardless, we were stoked to pull off a fast and fairly clean ascent of one of the hardest and most classic multipitch routes in the country.

Approach Beta

Stay on the Pine Creek trail for longer than you think, until you get to the Knoll Trail sign turnoff. Follow this trail as it cuts across the hill to Juniper Canyon, eventually depositing you in the wash significantly higher than if you try to approach Juniper Canyon directly. Boulder hop for about 10 minutes, passing the attractive problem Stand and Deliver V11, before cutting up left to the other side of the wash and gaining another easy trail that eventually takes you to the approach slabs of Rainbow Wall. The death slabs are fairly mellow if you traverse quite far left and then back right. Follow the path of least resistance to the base of the route.

Pitch By Pitch

P1 var. 5.11a. (Guidebook 5.11c; 5.11a felt more appropriate.) Follow the ramp/crack feature diagonally right to the main corner system. This pitch is mostly face climbing. The 12b original first pitch looks very high quality as well, with only 1 hard move. The belay here is hanging - linking P1 and P2 would be pretty mega.

P2 5.11c/d. (Guidebook 5.11d). One of my favorite pitches of the route. Combine face climbing and laybacking through awesome open handed grips and beautiful varnish through a wandering line to the anchor. Belay stance is just OK here.

P3 5.10c (Guidebook 5.11a) Go up the corner. A good pitch, but kinda unmemorable. There’s a really good belay stance 15’ before the anchor - a few hand size cams make the belay much more comfortable.

P4 5.10d (Guidebook 5.11a) Climb past the freaky pillar and through the roof. This roof is way easier than the roof on Monkeyfinger in Zion. Rock quality begins to deteriorate here. It’s not choss, but just not as amazing as the lower pitches. OK belay.

P5 5.10b Climb up the corner, past an optional belay, to the top of the main corner system.

P6 4th/low 5th simul for 350’ up and right to an exposed step around a corner and up a face to Over the Rainbow Ledge.

P7 5.7 Short traverse to the left over junky rock and loose blocks to an anchor. I downclimbed a fair bit at some points. We belayed at the lower station to eliminate rope drag.

P8 (Red Dihedral P1) 5.12a Launch up easy terrain to the base of the Red Dihedral. Bust out literally 1 hard move to gain a stance below the more famous boulder problem on the pitch and imagine free soloing it like Honnold. Choose your own adventure to gain the jug and enjoy really fun 5.11- stemming and crack climbing on amazing rock.

P9 (RD P2) 5.12a The hardest pitch on the route, probably. Sustained layback and stemming with good holds that are sometimes a bit too far apart. You actually have to place gear in strenuous positions on this pitch! But the difficulties end fairly quickly. Climb more 5.11- to a weird stance to the left.

P10 5.11a. Consider going back right and doing the 5.12b variation in the corner: a more direct line, but worse rock quality. The 5.11a pitch is probably 5.10- if you downclimb a bit and climb the right ramp. However, there’s a fun little move if you go direct off the belay: balance on one good foot, cartwheel, and grab the ramp.

P11 5.10. Climb standard Red Rock patina to the summit.

Selective exposure, equilibrium effects, feedback loops

Wow! Andrew Guess and coauthors have published a paper in Science very similar to the one I described six months ago, collaborating with Facebook researchers to turn off the ranked news feed in favor of a chronological one for random users. As we might have anticipated, results are basically null, except that people clearly like the chronological feed less and use Facebook/IG less often when it is turned on.

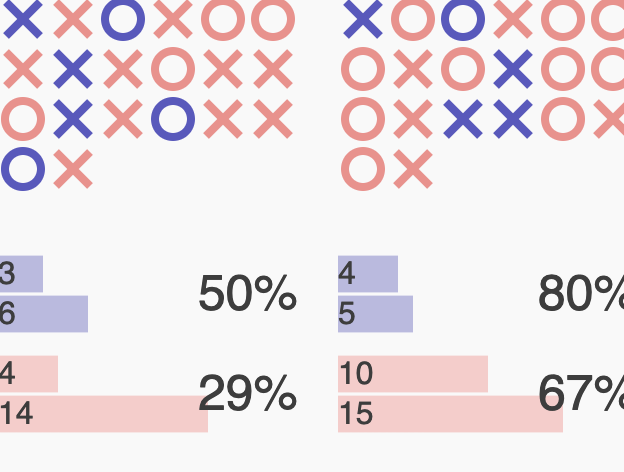

The idea that algorithm driven echo chambers cause polarization is just so painfully slippery. It sounds so plausible, but the issue is that basically no study has found evidence of this. Guess’s paper is basically state of the art when it comes to evaluating this question: turn off the algorithm, observe that like-minded exposure goes down and out-partisan content goes up, observe that polarization is unchanged. The conclusion that algorithm driven selective exposure doesn’t have an effect on polarization is hard to escape.

So the matter is finally settled? It’s hard to say. The idea seems like it will never die.

In my own research agenda, I’m working on understanding the same-but-opposite treatment: drastically increasing cross-cutting information (rather than scaling back like-minded information and hoping that some cross-cutting information takes its place). Much of the existing work on cross-cutting, or out-partisan, news in social media is very good, but a lot of it tends to be rather conservative in treatment strength.

Looking forward, ranking algorithms may have (tiny) direct effects, but what I’m really interested in are the equilibrium and feedback effects. How does the ranking algorithm (or more broadly, the way in which information is curated, distributed and consumed) change the way media companies, politicians, and influencers create content, and what are the political implications?

More on experimental equilibrium effects: a story from a particular social media company

Back in the day when I used to work at a particular social media company I saw an internal report describing how ranking, or the process of changing the order of content in user feeds, was provably a good thing. This was in the face of public pressure from scholars and commentators describing how content ranking algorithms created bias, gave controversial and divisive content opportunities to go viral, and caused other kinds of bad things.

The authors of the report had run a randomized experiment turning off ranking for a small portion of users for a few weeks. They showed that these users spent less time using the social media platform, scrolled past more stories, engaged less with meaningful content, and a whole host of other “negative” effects. Putting aside the obvious conflict of interest of some of these measurements (as in, maybe less time spent is actually a good thing, societally speaking), this is a great example of the research design not taking into account equilibrium effects.

For years the existence of the ranking algorithm has influenced the types of content produced both by content creators and by the social media company itself. Turning off the ranking algorithm for a small subset of users for just a couple of weeks is not a good hint at what the world would look like in the absence of a ranking algorithm.

If you have a ranking algorithm, there’s no penalty for just firehosing tons of content into the ether, relying on the algorithm to pick out the cream of the crop to bring to the top. If you turn off the algorithm, everyone will hate you because they are suddenly inundated with terrible content. But in a world that never had an algorithm in the first place, it’s unlikely that so much mediocre content would exist at all.
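
To make the firehose argument concrete, here is a toy sketch in Python. Everything in it is made up for illustration: the quality scores, the feed length `K`, and especially the assumption that creators in a never-ranked world would only post items scoring 5 or higher. It is not a model of any real platform or of the experiment in the report. It only shows how a short-run "ranking off" test can look bad while a never-ranked world looks fine.

```python
from statistics import mean

def feed_quality(items, k, ranked):
    """Mean quality of the k items a user actually sees.

    ranked=True shows the k best items; ranked=False shows the
    first k items in posting (chronological) order.
    """
    shown = sorted(items, reverse=True)[:k] if ranked else items[:k]
    return mean(shown)

# Hypothetical quality scores (1-10), listed in posting order.
# This is the "firehose" that exists because ranking filters it.
firehose = [2, 9, 1, 3, 8, 2, 1, 7, 3, 1]

# Assumed counterfactual: with no ranking to rescue weak posts,
# creators only publish items they expect to score 5 or higher.
curated = [q for q in firehose if q >= 5]

K = 3  # hypothetical number of items a user reads per session

status_quo = feed_quality(firehose, K, ranked=True)   # ranked feed, firehose supply
short_run = feed_quality(firehose, K, ranked=False)   # ranking switched off for a few users
long_run = feed_quality(curated, K, ranked=False)     # world that never had ranking

print(status_quo, short_run, long_run)
```

In this toy world the short-run experiment makes the chronological feed look much worse than the ranked one, but that gap comes entirely from holding the content supply fixed. Once supply is allowed to adjust, the chronological feed does as well as the ranked one. How real creators would actually adjust is exactly the open empirical question.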
